Transluce’s New Tool: A Game Changer for AI Transparency

Transluce, a non-profit research lab, has recently released a tool that shows how individual neurons behave inside large language models (LLMs). The lab's mission is to understand the “thought process” behind unexpected AI behavior, to predict and fix model problems, and to uncover hidden biases and correlations.

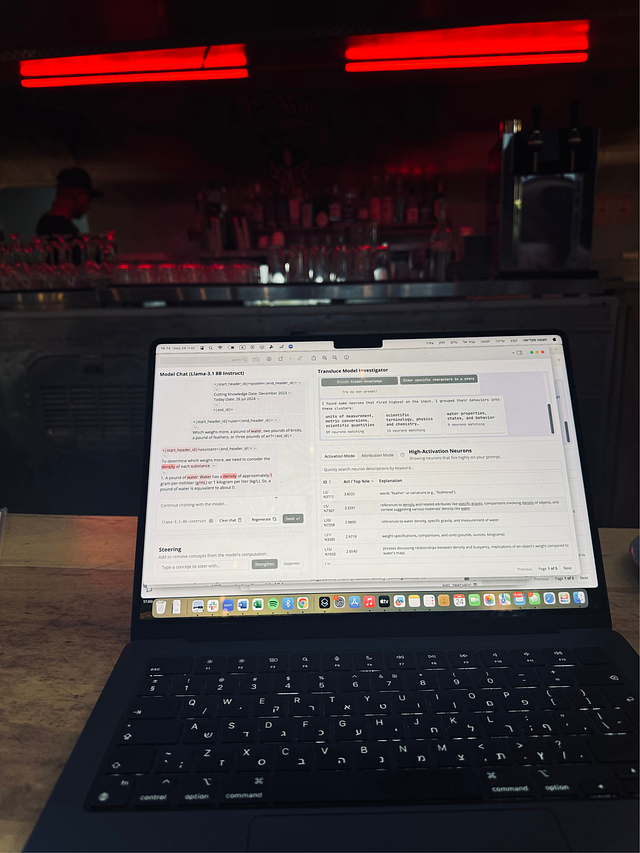

To this end, the lab has built an observability interface where users can enter a prompt, read the model's response, and examine which neurons were activated. Its approach also automatically generates high-quality natural-language descriptions of the neurons inside the model.

If you want to test the tool and explore tutorials, visit here.

Let’s take a closer look at how the tool works by walking through a test case.

Activation and Attribution:

Activation: The neuron's activation value, normalized against the activations that neuron produces over a large dataset. Normalization puts values on a comparable scale, so users can tell whether a neuron is firing unusually strongly in the positive or negative direction.
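To make the idea of dataset-based normalization concrete, here is a minimal sketch. It assumes the scale is a high quantile of the neuron's absolute activations over a reference dataset; that choice, and the helper names `quantile` and `normalize_activation`, are hypothetical illustrations, not Transluce's actual normalization scheme.

```python
# Hypothetical sketch: normalize a raw neuron activation against a reference
# distribution of that neuron's activations collected over a large dataset.
# Using a high quantile of |activation| as the scale is an ASSUMPTION.


def quantile(values: list[float], q: float) -> float:
    """Return the q-quantile (0 <= q <= 1) of values, with linear interpolation."""
    s = sorted(values)
    if not s:
        raise ValueError("need at least one value")
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    frac = pos - lo
    return s[lo] + (s[hi] - s[lo]) * frac


def normalize_activation(raw: float, reference: list[float], q: float = 0.99) -> float:
    """Scale a raw activation by the q-quantile of |activation| over the reference set.

    The sign is preserved, so positive and negative firing stay distinguishable.
    With q = 0.99, a magnitude of 1 or more means the activation is at least as
    large as the top 1% of reference activations.
    """
    scale = quantile([abs(v) for v in reference], q)
    if scale == 0:
        return 0.0
    return raw / scale


reference = [0.1, -0.2, 0.05, 0.3, -0.4, 2.0]
print(normalize_activation(1.0, reference, q=1.0))  # 0.5
```

Preserving the sign matters here because, as described above, normalized values are meant to reveal both positive and negative behavior.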

Attribution: Measures how much the neuron influences the model’s output. Attribution values are not normalized; they are computed from the gradient of the output token’s probability with respect to the neuron’s activation.
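The gradient described above can be illustrated on a toy model. This sketch assumes the neurons feed a single linear readout followed by a softmax, so the gradient has a closed form: with $z = Wa + b$ and $p = \mathrm{softmax}(z)$, $\partial p_t / \partial a_j = p_t \sum_k (\delta_{tk} - p_k) W_{kj}$. A real LLM backpropagates through many more layers; the names `logits` and `prob_gradient` are hypothetical.

```python
import math

# Toy model (ASSUMPTION): neuron activations `acts` feed a linear readout
# z = W @ acts + b, followed by a softmax over a tiny vocabulary.


def softmax(z: list[float]) -> list[float]:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def logits(acts: list[float], W: list[list[float]], b: list[float]) -> list[float]:
    # W[k][j] is the weight from neuron j to vocabulary token k.
    return [sum(W[k][j] * acts[j] for j in range(len(acts))) + b[k] for k in range(len(b))]


def prob_gradient(acts: list[float], W: list[list[float]], b: list[float], target: int) -> list[float]:
    """Return d p(target) / d acts[j] for every neuron j (unnormalized attribution)."""
    p = softmax(logits(acts, W, b))
    return [
        p[target] * sum(((1.0 if k == target else 0.0) - p[k]) * W[k][j] for k in range(len(b)))
        for j in range(len(acts))
    ]


acts = [0.5, -1.0]
W = [[1.0, 0.0], [0.0, 1.0], [0.5, -0.5]]
b = [0.0, 0.1, -0.2]
print(prob_gradient(acts, W, b, target=0))
```

A positive value means nudging that neuron upward would raise the probability of the target token; a negative value means it would lower it.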

Together, these two measures show which neurons fire on a given input and which of them actually influence the output. This helps users spot recurring patterns and group related neurons into clusters of concepts.
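One way to combine the two measures is sketched below: keep only neurons that are strongly active, then rank them by the magnitude of their attribution. The `NeuronReading` record, the threshold, and the sample descriptions are all hypothetical; the tool's own interface may surface this information differently.

```python
from dataclasses import dataclass

# Hypothetical record for one neuron's readings on a single output token.


@dataclass
class NeuronReading:
    layer: int
    index: int
    description: str    # e.g. an automatically generated neuron label
    activation: float   # normalized activation
    attribution: float  # gradient-based, unnormalized


def influential_neurons(readings: list[NeuronReading], min_activation: float = 0.5, top_k: int = 3) -> list[NeuronReading]:
    """Keep neurons with |activation| >= min_activation, ranked by |attribution|."""
    active = [r for r in readings if abs(r.activation) >= min_activation]
    return sorted(active, key=lambda r: abs(r.attribution), reverse=True)[:top_k]


readings = [
    NeuronReading(10, 1, "family relationships", 0.9, 0.02),
    NeuronReading(12, 7, "chemical compounds", 0.7, -0.05),
    NeuronReading(3, 4, "punctuation", 0.1, 0.3),
]
for r in influential_neurons(readings):
    print(r.layer, r.index, r.description)
```

Filtering on activation first avoids ranking neurons that barely fire but happen to have a large gradient, like the "punctuation" entry in this example.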

The test case used a simple logic question:

Q: “Alice has 4 brothers and 2 sisters. How many sisters do Alice’s brothers have?”
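The trap in this question is that each brother's sisters include Alice herself, so the correct answer is 2 + 1 = 3. The short sketch below checks this by listing the family explicitly; the sibling names are made up for illustration.

```python
# Model the family explicitly: Alice, her 2 sisters, and her 4 brothers.
# Names other than Alice are hypothetical placeholders.
children = (
    [("Alice", "F")]
    + [(f"Sister{i}", "F") for i in range(1, 3)]
    + [(f"Brother{i}", "M") for i in range(1, 5)]
)


def count_sisters(children: list[tuple[str, str]], person: str) -> int:
    """Count the girls among `person`'s siblings (everyone else in the family)."""
    return sum(1 for name, sex in children if sex == "F" and name != person)


print(count_sisters(children, "Alice"))     # 2, matching the question
print(count_sisters(children, "Brother1"))  # 3, the correct answer
```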

The model initially answered incorrectly. After steering, that is, adjusting the neurons tied to concepts such as gender roles and to irrelevant concepts like chemical compounds, the model eventually produced the correct answer: 3 (Alice’s 2 sisters plus Alice herself). The path to that answer was unconventional, but the result shows the tool’s potential for deepening our understanding of AI models.
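Steering can be pictured as overriding selected neuron activations and re-running the rest of the computation. The sketch below assumes a toy two-neuron model with a linear readout over a two-token vocabulary; the neuron roles and weights are invented purely to show how suppressing one distracting neuron can flip the prediction, and do not describe how Transluce implements steering.

```python
# Toy steering sketch (ASSUMPTIONS: two neurons, linear readout, two-token vocab).
VOCAB = ["2", "3"]


def steer(acts: list[float], overrides: dict[int, float]) -> list[float]:
    """Return a copy of `acts` with selected neurons clamped to fixed values."""
    steered = list(acts)
    for j, value in overrides.items():
        steered[j] = value
    return steered


def predict(acts: list[float], W: list[list[float]], b: list[float]) -> str:
    """Return the vocabulary token with the highest logit."""
    z = [sum(w * a for w, a in zip(row, acts)) + bk for row, bk in zip(W, b)]
    return VOCAB[max(range(len(z)), key=z.__getitem__)]


# Hypothetical roles: neuron 0 "counts Alice as a sister", neuron 1 is a distractor.
W = [[0.0, 1.0],   # logit for "2" is driven by the distractor neuron
     [1.0, 0.0]]   # logit for "3" is driven by neuron 0
b = [0.0, 0.0]
acts = [0.2, 0.8]

print(predict(acts, W, b))                     # "2" (wrong)
print(predict(steer(acts, {1: 0.0}), W, b))    # "3" after suppressing neuron 1
```

Clamping to a fixed value is only one steering choice; scaling an activation up or down is another common option.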

In conclusion, despite some trial and error, the tool’s ability to observe and manipulate neuron behavior in real time is both impressive and promising. This level of transparency and control opens up new ways to improve AI models and to understand their inner workings.